In 2016, a 12-year-old named Taylor Cadle reported to the Polk County Sheriff’s Office, in central Florida, that she had been raped by her adoptive father. The detective investigating the case didn’t believe her, and Taylor was charged with filing a false police report, a first-degree misdemeanor. As part of the terms of her probation, she was required to write an apology letter to her adoptive father. Soon after, he abused Taylor again, and this time Taylor took photos and video of the incident on her phone. Taylor’s evidence ultimately led to her adoptive father being sentenced to 17 years in prison.

But, as Rachel de Leon and I detailed in an investigation last fall, the fallout from the case was minimal: Sheriff Grady Judd, a charismatic, tough-on-crime influencer with more than 780,000 TikTok followers, never apologized to Taylor or acknowledged the case publicly—despite his often-repeated phrase, “If you mess up, then dress up, fess up, and fix it up.” When we published our piece, the detective investigating the case, Melissa Turnage, was still on track to become sergeant. (After the story came out, she was required to complete a weeklong online course on interrogation techniques.)

The Center for Investigative Reporting’s story, which aired on PBS NewsHour and was published in Mother Jones, led commenters to flood the sheriff’s office’s TikTok and Instagram pages with demands for justice for Taylor. But soon after being posted, many of those comments mysteriously disappeared from view, prompting more anger.

Screenshots from TikTok, Facebook, and Instagram appear to show that the sheriff’s office has been routinely filtering out comments criticizing its handling of the case. Recently hidden comments suggest that it is automatically removing comments that include the word “Taylor” from public view.

After noticing the disappearing comments in late October, Taylor decided to email Judd directly. She admired his work overall, she wrote, but she was outraged. “I thought you guys were supposed to help? Not silence a victim.”

“I shared my story to bring light to the situation, in hopes for a change within the system,” Taylor said recently. “Yet I’m still being pushed to a dark corner with no acknowledgement whatsoever. [Judd is] choosing to turn a blind eye towards the situation—which feels similar to my 2016 case when I was pushed away.”

The sheriff’s office didn’t respond to multiple requests for comment.

Katie Fallow, the deputy litigation director at the Knight First Amendment Institute, where she focuses on threats to free speech on social media, says that the sheriff’s office appears to be engaging in “viewpoint discrimination,” a violation of the First Amendment in which a government entity treats speech differently depending on the opinion it expresses. “They don’t want people to post comments that criticize the police department’s handling of this situation, and they’re using custom keyword blocking, if that’s true, to just always hide those comments,” Fallow says. “I don’t see how they could justify that.”

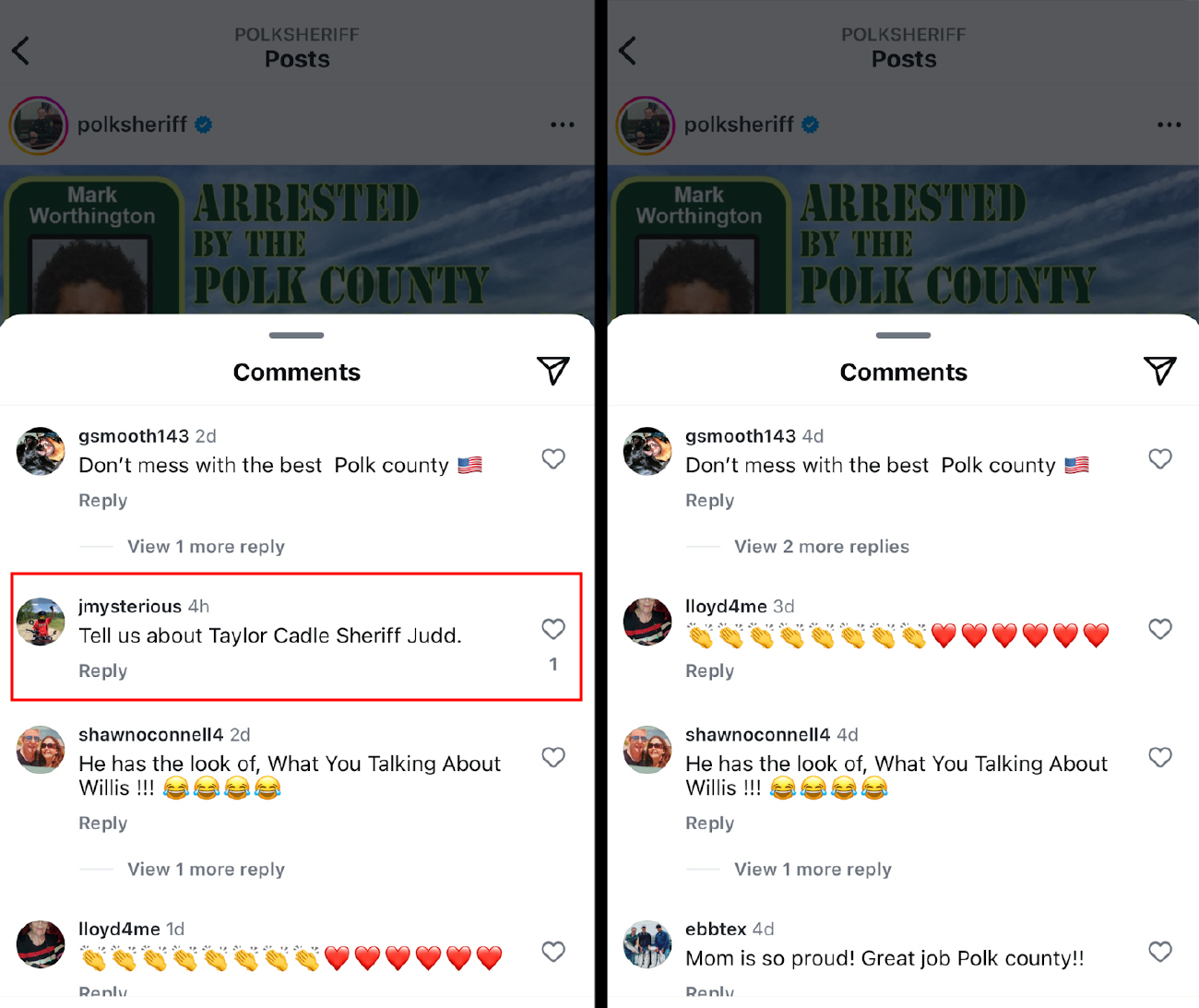

On November 3, five days after the PBS NewsHour story aired, an Instagram commenter wrote, “When are you going to apologize to Taylor Cadle?” On a separate post, another commenter wrote, “Tell us about Taylor Cadle Sheriff Judd.” Within days, both comments disappeared from public view.

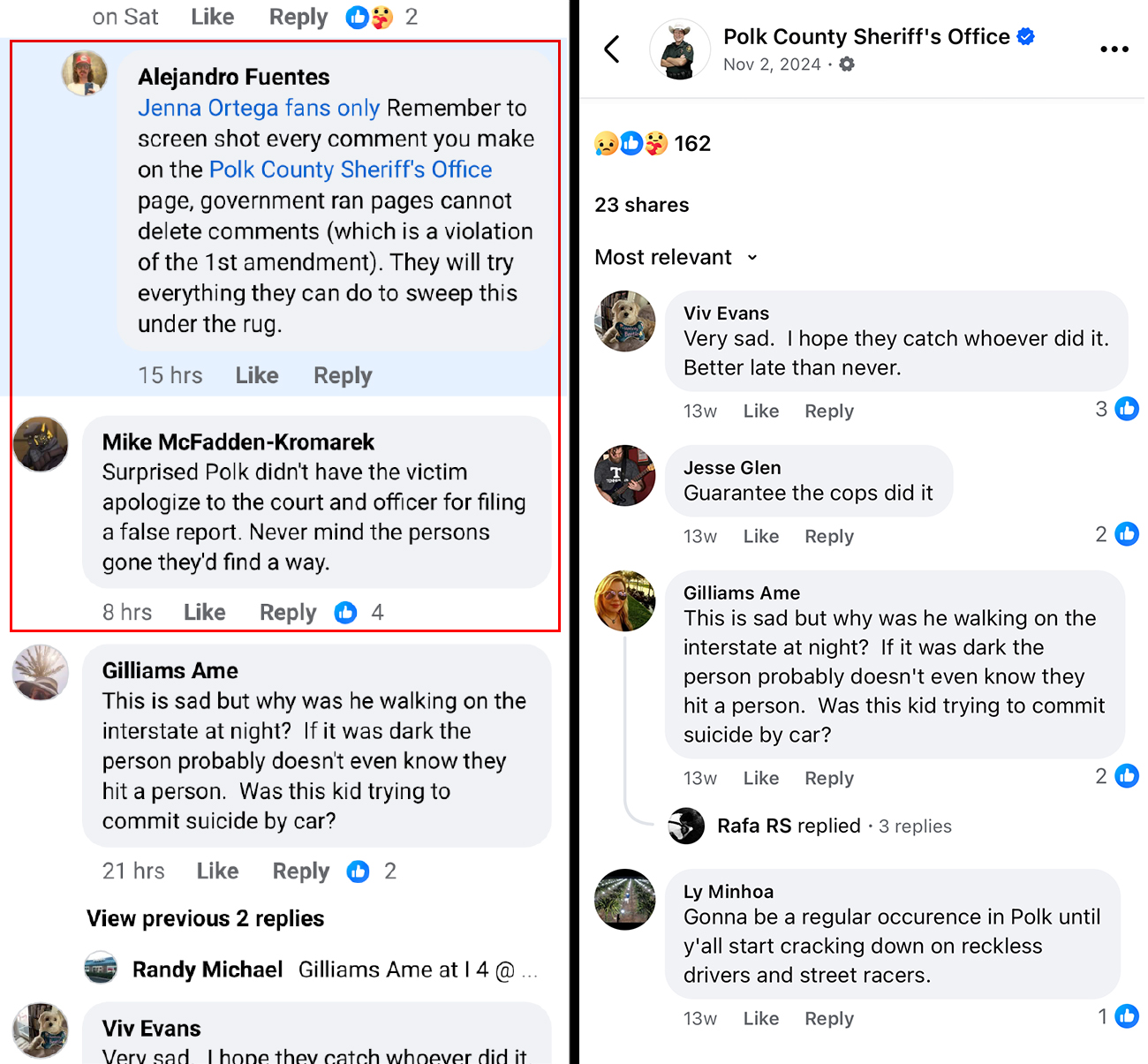

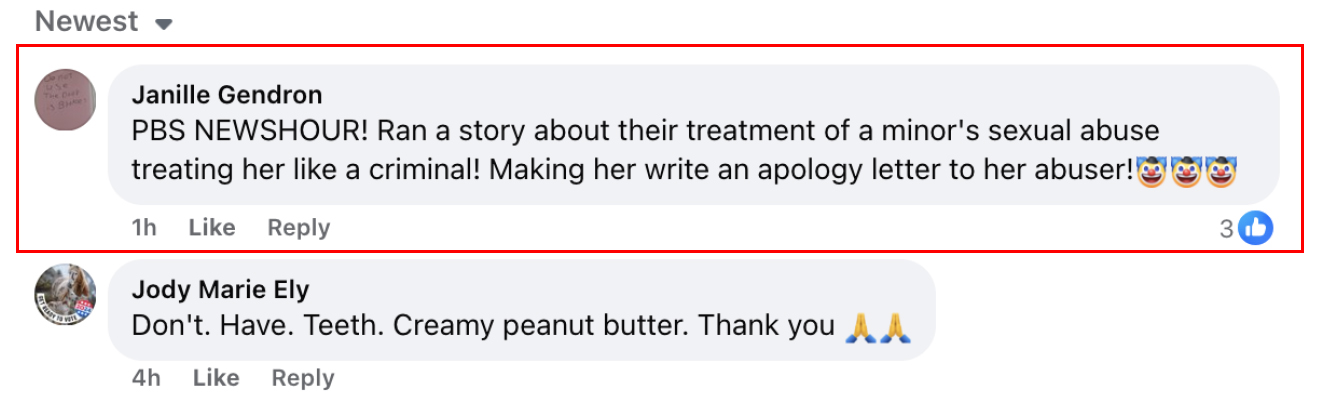

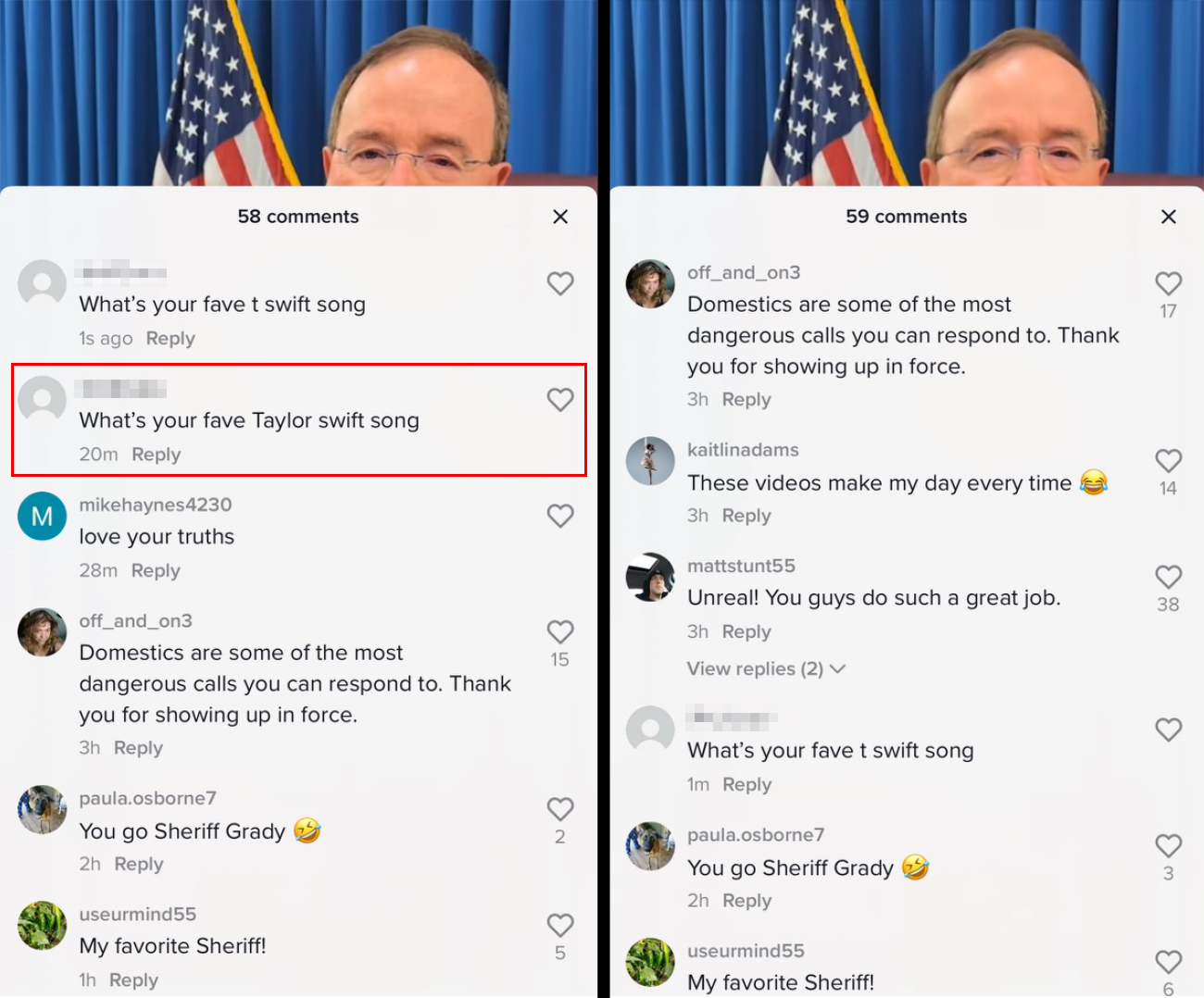

In the images below, the comments in red rectangles are no longer visible:

On Facebook, several comments about Taylor’s case—including a comment about the disappearance of other comments (“They will try everything they can do to sweep this under the rug”)—also disappeared.

In several cases, those who posted the comments can still see them, but the public cannot. Some commenters took notice. “I’m confused,” wrote one person in early November. “43 comments and the public can access only 2.”

One commenter, who asked to remain anonymous, said that after he first heard about the story on PBS NewsHour, he went to the sheriff’s TikTok page to join the many people commenting about the case. “It really was just a crazy story,” he says. “I figured if I post too, it helps with the visibility.” But he was surprised when his comment, about the fact that Taylor was made to apologize to the man who abused her, disappeared. He then posted another comment noting that his comments kept disappearing, and that one, too, soon vanished.

In January, he started experimenting with TikTok comments, and he noticed a pattern: Comments with the word “Taylor” appeared to automatically disappear from public view, though they were still visible to the commenter. His comment reading “what’s your fave taylor swift song” immediately disappeared, but “what’s your fave t swift song” stayed up. Similarly, “Justice for Taylor” was hidden, but “j u s t i c e f o r t a y l 0 r” (using a zero in her name) stayed up.

The commenter was taken aback by what appears to be an automatic filtering of Taylor’s name. “It’s one thing if they don’t apologize and pretend it didn’t happen,” he says. “They can’t be forced to apologize. But it’s another thing for them to just be blocking this. There’s so much irony there, it’s crazy.”

The sheriff’s office’s social media guidelines note that it reserves the right to remove comments that are inappropriate or offensive, that contain “disruptively repetitive content,” or that “are off-topic or not related to the content within the post, or any content posted on the platform.”

“I think there’s a version of that [rule] that is legitimate,” says Vera Eidelman, a staff attorney at the American Civil Liberties Union. “But I also think that exactly these kinds of off-topic rules are ones that can get abused very easily because they’re incredibly discretionary and they can be used as pretext to shut down conversations that the government doesn’t like.”

Fallow notes that if the office were hiding comments containing the word “Taylor” because they were off-topic, it would presumably have hidden other off-topic comments as well, such as the equally unrelated “t swift” comment.

The issue at hand is more than simply a matter of a handful of erased comments, says Fallow. “It’s a big deal, because social media accounts run by government entities have become the sort of digital town hall where people can hear from their public officials and talk with each other about how their government is working and criticize the government,” she says. “And those are core principles at the heart of the First Amendment, which is engaging in speech and speaking to other citizens about public policy. It’s on a new medium of communication, but it absolutely has a fundamental impact on free speech and the right to engage in democracy.”

Rachel de Leon contributed reporting to this story.